Behind the Scenes: Making a Scheduler Agent for SVT

In this article

Behind-the-scenes story of how we built an agent that schedules photographers for the SVT (Swedish Television) documentary Homo Roboticus

We were recently involved in the SVT documentary Homo Roboticus, where we helped build an AI agent that handles complex scheduling of photo journalists. The agent communicates via email, sms, and voice calls, and it even built its own database and interactive dashboard for visualizing and managing the schedule in collaboration with SVT staff.

Check out the SVT video and article showcasing the agent. This blog post is the behind-the-scenes story - what the agent actually does, how it works, how we co-created it with the customer, and some of the challenges along the way. Near the end I let the agent itself write a section, describing its own perspective on the journey :)

How did we get involved in this?

Quick backstory. A couple of years ago we were involved in an SVT documentary called Generation AI. There we built a journalist agent, an AI colleague to the human journalist, working together to research news topics, write and review manuscripts, generate images, and even generate the actual news video.

Ever since then we've grown and evolved our company, our agent platform, and our agent design process. We've worked with countless companies - large and small - to deploy agents of all sorts. Needless to say, we've learned a lot during that journey! So when SVT invited us to participate in this new documentary, we were happy to take the opportunity to show what's possible with AI Agents.

This was a very challenging project because the timeline was insanely short, just 2 weeks. During that time we had to fit in ideation, training, design, development, testing, and tuning. And since this came up on short notice, we all had pretty full schedules already, so we had to fit this in somehow. Pretty intense, but fun.

Context Note: While this TV documentary required a 2-week sprint, real transformation happens through our platform partnerships. We typically start with a 4-6 week pilot, then help organizations adopt our platform to build and scale their own AI agents across teams. This isn't about one-off projects—it's about giving your organization the capabilities to create human+AI teams. Learn more about our partnership model here.

What problem does the agent solve?

This is Sara. She works at SVT Newsdesk. She is an awesome person who handles scheduling of photo journalists - making sure the right photographer is at the right place, at the right time, with the right equipment.

This is a complex job because she needs to take into account a LOT of different factors:

- 25+ photographers with different skills, preferences, and availability

- Multiple programs with specific coverage needs: Desk, Plan, Kultur, Sport, Agenda, and more.

- Complex shift patterns ranging from early morning (04:00) to late night (22:00)

- Break requirements - 30 to 90 minutes depending on the shift

- Legal rest requirements - mandatory 11-hour rest between shifts

- Different employment contracts - some work 36.5 hours/week, others 34.5, with limits on evening shifts

- Weekend rotation - photographers work every 4th or 8th weekend, some with "livejour" (emergency on-call duty with equipment at home)

- Individual specializations and preferences - some only work certain programs, some prefer mornings, others evenings

- Cyclical scheduling preferences - the overall schedule repeats on an 8 week cycle, but with plenty of exceptions.

- Weekly hours must balance out over 8-week periods to match each person's contract
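To make one of these rules concrete, here is a minimal sketch of how the 11-hour rest requirement could be checked in code. This is not how the agent itself reasons (its rules live in its instructions and documents). The `Shift` fields, the function name, and the dates are all made up for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

MIN_REST = timedelta(hours=11)  # the mandatory rest between shifts listed above


@dataclass(frozen=True)
class Shift:
    # Hypothetical field names, not SVT's real data model.
    photographer: str
    program: str
    start: datetime
    end: datetime


def rest_violations(shifts: list[Shift]) -> list[tuple[Shift, Shift]]:
    """Return consecutive shift pairs (per photographer) with less than 11 hours of rest."""
    by_person: dict[str, list[Shift]] = {}
    for shift in shifts:
        by_person.setdefault(shift.photographer, []).append(shift)

    violations = []
    for person_shifts in by_person.values():
        ordered = sorted(person_shifts, key=lambda s: s.start)
        # Compare each shift with the next one for the same person.
        for previous, following in zip(ordered, ordered[1:]):
            if following.start - previous.end < MIN_REST:
                violations.append((previous, following))
    return violations


# Illustrative data: a shift ending 22:00 followed by a 04:00 start leaves only 6 hours of rest.
example = [
    Shift("A", "Desk", datetime(2025, 9, 15, 11, 0), datetime(2025, 9, 15, 22, 0)),
    Shift("A", "Sport", datetime(2025, 9, 16, 4, 0), datetime(2025, 9, 16, 10, 30)),
    Shift("B", "Kultur", datetime(2025, 9, 15, 9, 0), datetime(2025, 9, 15, 20, 30)),
    Shift("B", "Kultur", datetime(2025, 9, 16, 9, 0), datetime(2025, 9, 16, 20, 30)),
]

for previous, following in rest_violations(example):
    print(f"{previous.photographer}: too little rest before {following.program}")
```

A single rule like this is easy. The hard part is that every swap has to satisfy all the rules in the list at once, for everyone involved.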

However the biggest challenge is changes. Almost every day something will come up that requires rescheduling. For example:

- Somebody is sick or has a doctor's appointment

- Breaking news somewhere in the world, so she needs to deploy a group of photographers on short notice and rearrange the schedules

- Unexpected events such as a photographer being stuck in a country, or a program being canceled or rescheduled.

When we asked Sara what she hopes the agent will do for her, she said:

"Save time! I have more tasks than what is reasonable for one person. I want to spend as little time on this as possible."

Another gain would be for the photographers themselves. An agent is available 24/7 and is never tired or bogged down with other work. So the photographer can get a near instant response, and scheduling issues can be resolved within minutes rather than days, which is helpful to everyone involved.

How did we build it?

Our normal process for building custom agents looks something like this:

However this was an accelerated schedule in order to meet the recording date, so we took some shortcuts.

Step 1: Training

We started with a one day training course with Sara and other stakeholders, explaining what agents are, how to find the right use cases, and how to make it work in practice using our agent platform. During the training day we identified the most promising use cases, and decided to go for a scheduling agent, with Sara as owner.

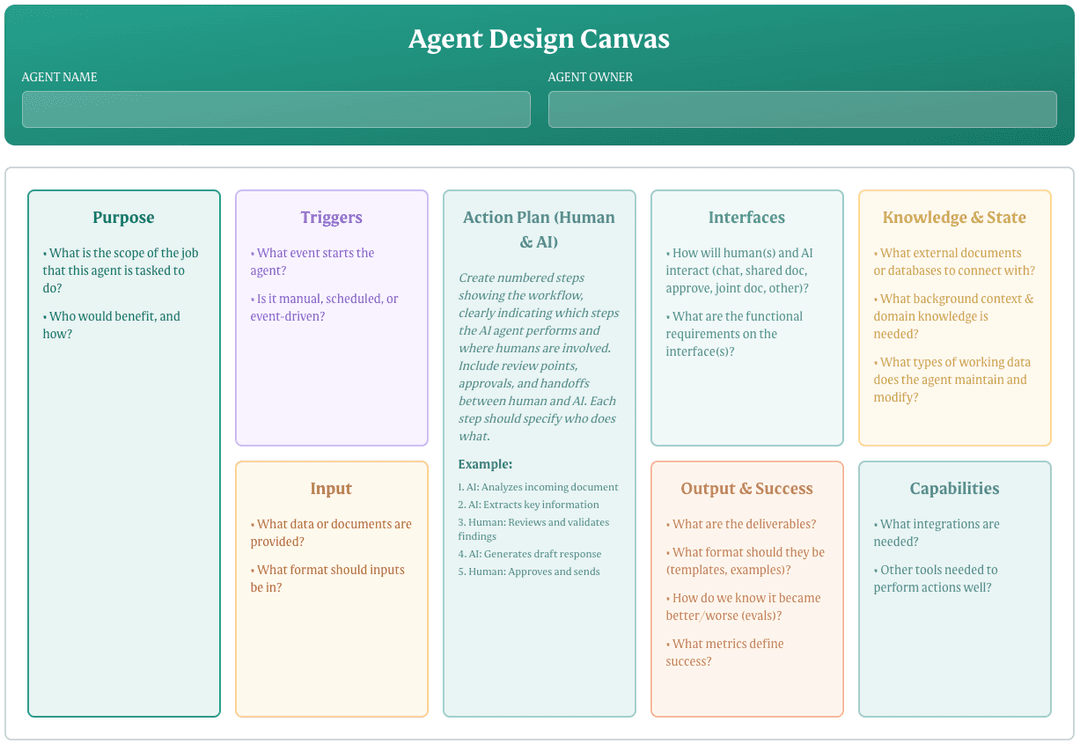

We worked with her to create an Agent Design Canvas, a structured approach for describing what the agent does, how, and why.

Step 2: Prototype

In conjunction with the training we helped Sara create a working prototype, to start testing and get a sense of whether or not the agent can handle the task.

Our agent platform is optimized to make it really easy and fast to get a first version of an agent running (see the demo video). So we had the first working version within minutes, and then spent some time tweaking the prototype, basically poking around to see what is possible - what can the agent do easily, what does it struggle with, how should we represent scheduling data, which communication channels would be useful to have, etc. We did this prototyping together with Sara over a few remote sessions.

The goal of prototyping is not to build a perfect agent. The goal is to build confidence that this agent will be able to do its job well, sooner or later.

For complex agents, it is important to work together - domain expert (Sara in this case), and AI expert (us in this case) - and co-create the agent. Sara has deep knowledge about scheduling photo journalists at SVT, and we have deep knowledge of how to make useful AI agents. But to build a useful AI agent that handles scheduling at SVT, we needed to put our heads together. Neither of us could have done it alone.

Step 3: Iterate and tweak

By now we had an agent with:

- A pretty good starting set of instructions and documents describing the agent's job, the rules it should follow, external regulations, etc.

- Access to the tools needed to do the job - for example a scheduling database that it can read/write in, communication channels (email, sms, phone calls), and a fairly clear workflow

- A simple app to visualize the schedule and suggest changes, etc.

- Scripts to import/export scheduling data.

Next step was to take the agent from "OK, this sort of works, but needs improvement" to "Wow, this agent is awesome!".

We did this in a test-driven way. We asked Sara to identify some test cases, concrete scenarios that the agent should be able to deal with - requests from Sara, or requests from photographers. The list included both trivial scenarios and more complex scenarios.

Here are some of the actual test cases we used:

- Test Case 1: Email from photographer with constraint

"Hi, Corneila here, I have football practice on Tuesday. I have Desk 11-22. Want to finish by 17 at the latest."

- Test Case 2: Avoiding consecutive evening shifts

"Andreas doesn't want to work four 9-19.30 shifts in a row. Can he swap away two of the days in week 38? Who can he swap with? He wants to work 08.30-17, 06-15 or morning shifts instead."

- Test Case 3: Multi-day shift swap

"Håkan here, I would like to swap from 08.30-17 to Sport 04-10.30 Monday-Wednesday week 38. Who can I swap with?"

- Test Case 4: Verify staffing requirements

"Can you check that we've staffed the right number of Kultur 9-20.30 in week 38?"

- Test Case 5: Find available photographer

"I need to send a photographer on a documentary shoot in week 38. The person should preferably have a week with Uutiset, 08.30-17 or 08-17. Who could go?"

- Test Case 6: Program-specific swap request

"Niclas wants shifts on Kultur. As many as possible. And preferably swap the entire week with someone on Kultur. Who can he swap with?"

For each test case, we asked Sara to write the expected answer. If the agent works really well, what would it answer? What would Sara herself have answered?

This helped us be very focused during our sessions. We would basically:

- Give the agent one of the requests from the cases.

- Ask Sara to grade how good the answer was (from A to F).

- If the grade was low, we asked Sara to think about what context the agent was missing. Sometimes we asked the agent itself, saying "Hey, we expected the answer to be X, but you said Y. What kind of context are you missing?". We told her to think of it as a newly hired intern. What additional context does the intern need in order to do a better job? Where were the instructions unclear or contradictory?

- Based on this analysis, we updated the agent's instructions and/or documents, and tried again.

- Rinse and repeat until the agent starts giving really good answers.
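The loop above can be sketched in a few lines of code. This is only an illustration of the idea. In our sessions the grading was done by Sara by hand, not by a function, and `EvalCase`, `run_round`, and the passing grade of "B" are all hypothetical names and choices.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class EvalCase:
    name: str
    request: str          # what Sara or a photographer asks the agent
    expected: str         # the answer the domain expert would have given
    grades: list[str] = field(default_factory=list)  # one letter grade per round


def run_round(
    cases: list[EvalCase],
    ask_agent: Callable[[str], str],
    grade_answer: Callable[[EvalCase, str], str],
    passing_grade: str = "B",  # assumed threshold, not from the project
) -> list[str]:
    """Run every case once and return the names of the cases that still need work."""
    needs_work = []
    for case in cases:
        answer = ask_agent(case.request)
        grade = grade_answer(case, answer)  # a human reviewer in the real process
        case.grades.append(grade)
        if grade > passing_grade:  # letters later in the alphabet are worse grades
            needs_work.append(case.name)
    return needs_work


# Stub agent and stub grader, just to show the shape of one round.
cases = [
    EvalCase(
        name="Verify staffing requirements",
        request="Can you check that we've staffed the right number of Kultur 9-20.30 in week 38?",
        expected="<the expected answer written by the domain expert>",
    )
]
still_failing = run_round(
    cases,
    ask_agent=lambda request: "<agent answer>",
    grade_answer=lambda case, answer: "A" if answer == case.expected else "D",
)
print(still_failing)
```

Each case that comes back in `still_failing` is a prompt to ask "what context is the agent missing?", update the instructions or documents, and run the round again.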

For some of the test cases the agent gave a perfect answer right from the beginning, while others were more challenging and needed some rounds of feedback and improvement before the agent could handle them.

Between our sessions, Sara and I would both have homework.

- Sara's homework was typically to dig up more data to give to the agent, and test the agent's performance in realistic scenarios.

- My homework was typically to figure out technical issues such as how to represent the data (see separate section below), how to optimize cost, and how to manage the agent's context.

We discovered quite early that a visual schedule dashboard would be useful.

One cool feature with our platform is that agents can create their own data-backed apps. So we simply asked the agent to make it. After a few rounds of iterating we ended up with this, a calendar view where the agent can suggest changes and Sara can browse and approve. Whenever discussing with the agent, it will pull up this view and highlight suggested changes there, kind of like two colleagues sitting in front of a schedule and discussing.

Step 4: Plan the recording

Normally the next step would be to look into deployment and scaling. But in this case the next step was to prepare for the TV recording, so we started planning how we could most easily showcase this agent on screen, and the process behind. We ended up asking Marco, the photographer recording the session, to make a voice call to the agent and ask it to help solve a scheduling issue, and then show what happens.

But I won't go into details here, since that is more about TV production than about agent design.

How the agent actually works

The agent's job is to help Sara resolve scheduling issues. The main stakeholders are Sara herself, and the photographers. Typical scenarios:

- Sara is working on the schedule and asks questions, asks the agent to suggest changes, etc. This is typically done in the agent platform using the chat and the schedule app.

- A photographer reaches out to the agent (via email, sms, or phone call), and requests a change.

The second scenario is the most complex (and hence the most interesting), since it involves multiple people, multiple communication channels, and review/approval steps.

This image summarizes the process:

- A photographer calls the agent and asks for help to solve a scheduling issue. For example "I need to go to the chiropractor on Friday, can you find someone to cover for me".

- The agent asks a followup question or two, confirms the request, and then says that it will look into this and respond back via sms. It then starts trying to solve the problem, which could take several minutes. Once it finds a solution, it places a suggestion in the database and emails Sara for approval.

- Sara clicks the link in the email, which leads to the agent platform, where the agent immediately brings up the problem and the suggested solution, and visualizes the change in the calendar app. Sara approves it, or requests some tweaks to the plan first and then approves it.

- The agent updates the schedule and sends sms notifications to everyone involved.

The key thing is that Sara is still in control. The agent does all the time-consuming work of analyzing and communicating, so Sara can focus on reviewing the suggestion rather than doing all the ground work herself.
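The "Sara is still in control" part boils down to a simple rule: a suggestion can only reach the schedule after it has been approved. Here is a minimal sketch of that rule. The class and function names are hypothetical and this is not the platform's actual implementation.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    PENDING = "pending"    # the agent has proposed a change and is waiting for review
    APPROVED = "approved"  # the reviewer accepted it, so the schedule may be updated
    REJECTED = "rejected"


@dataclass
class Suggestion:
    requested_by: str
    description: str
    status: Status = Status.PENDING


@dataclass
class Schedule:
    applied: list[str] = field(default_factory=list)

    def apply(self, suggestion: Suggestion) -> None:
        # The guard is the whole point: nothing is changed without approval.
        if suggestion.status is not Status.APPROVED:
            raise PermissionError("suggestion has not been approved")
        self.applied.append(suggestion.description)


def review(suggestion: Suggestion, approve: bool) -> Suggestion:
    """Record the human reviewer's decision on a pending suggestion."""
    suggestion.status = Status.APPROVED if approve else Status.REJECTED
    return suggestion


# The agent proposes, the human decides, and only then is the schedule updated.
suggestion = Suggestion("Photographer A", "Photographer B covers Friday's shift")
schedule = Schedule()
review(suggestion, approve=True)
schedule.apply(suggestion)
print(schedule.applied)
```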

How well does the agent perform?

The results were surprisingly positive. I say surprisingly, because I honestly wasn't too sure in the beginning - the problem just seemed so complex. But after a few rounds of iterating, both Sara and I were impressed. We could classify each execution into:

- Success: The agent handled the problem perfectly. Sara could just approve it directly.

- Partial success: The agent made an OK suggestion, but with room for improvement. Sara took the suggestion as a starting point, and then either tweaked it herself or gave the agent feedback and asked it to think again.

- Failure: The problem was too complex for the agent to solve. The agent either admitted that it doesn't know how to solve it, or made a suggestion that wasn't good enough. So Sara solved it herself.

In most cases the agent succeeded, or succeeded partially. We saw few outright failures. And when it did fail there was usually a good reason for it - the problem was unsolvable, the given information was incorrect or misleading, or some vital context was missing.

The nice thing is that even the partial successes were useful, since Sara didn't need to start from scratch when solving the problem, she could just tweak the agent's suggestion. And in the failure cases Sara would handle things the old way, manually, so it wasn't really any worse than before. And she could tweak the prompts to improve the agent's ability for next time.

The goal here was not to replace Sara. This was about augmentation rather than automation. The goal was to save time for her to do more high-value work and handle all her other tasks, the stuff she really wishes she had more time for. And of course also to speed up the whole schedule juggling process and make life easier for everyone involved.

As a result, the agent did not need to be perfect. It just needed to be good enough to be genuinely useful.

At the end of the show Alex (the reporter) asked Sara:

Alex (reporter):

"Do you feel comfortable letting the agent take over these tasks now? How does it feel?"

Sara:

"I'm comfortable using it as a sounding board and having it suggest solutions. But I want to maintain control - I don't want it to just make the swaps on its own without checking with me first."

However, this was an incredibly accelerated timeline for implementation, so some more work would be needed before this agent is 100% production grade.

Challenges and lessons learned

This project is an example of a "Custom agent". Our platform is designed around the principle of "Low threshold" and "High ceiling".

- Low threshold = it is easy to build a simple agent, even without assistance (try it yourself here)

- High ceiling = it is possible to build advanced, complex agents. But that is best done in partnership with us.

This was a "high ceiling" agent, mainly because:

- The scheduling constraints are very complex

- The amount of data is significant. The schedule typically covers about 8 weeks of planning, so with 25 photo journalists that's 56 days x 25 people, so about 1400 scheduling slots to juggle - while obeying all the scheduling constraints and rules, and handling fuzzy tradeoffs.

- The agent would need to handle multiple communication channels (email, sms, phone calls), keep track of pending suggestions, and synchronize between different people. Sara needs to review and approve schedule changes, so we needed to teach the agent how to handle this workflow.

Challenge: Understanding the job

This agent was supposed to do part of Sara's job, like an assistant. However, this is a job that Sara had been doing for many years. She had built up a huge amount of tacit knowledge, she just "knew" how to do things. This is a common challenge when working with domain experts - getting the knowledge out of their heads and into a prompt or document.

We started by interviewing Sara about all the things she takes into account when handling a scheduling issue, and writing it down together. But this quickly got very complicated. So after a while we shifted to a test-driven approach, as described above (this is often called "evals" in the AI community, short for evaluations). That worked really well.

Instead of asking her to describe the rules upfront, we asked her to describe concrete examples of problems she deals with, how she would respond to those, and why. And then we had the agent try solving those problems, and whenever it got it wrong, we asked Sara to "coach" the agent and give it feedback. This allowed us to capture and document the key parts of her domain expertise in a more structured, iterative way.

The agent will never know everything she does. And it doesn't need to. It just needs to know enough to be useful, and then more knowledge can be given later on a case-by-case basis, letting the agent improve over time. Similar to onboarding an intern.

Challenge: Data representation

Since the agent works with schedules, that data needs to be represented somehow.

The first problem was that the data was scattered across all sorts of different systems. So we asked Sara to consolidate it into a few simple spreadsheets (we used Google Sheets as a temporary landing spot for this data). This did involve some manual work on her part; a later step would be to automate this.

The key spreadsheet was the scheduling data - each column is a date, each row is a photographer, and each cell shows what that photographer is scheduled to do on that day.

Great. Now we had clean data. But how will the agent actually use it?

First let's get a little bit technical here. The Abundly platform is a form of operating system for AI agents. The agent lives inside the platform. The agent's "brain", however, lives outside the platform. We use LLMs (large language models) to do the raw thinking. In this case we use Claude Opus 4 (the best model available at the time).

When the agent is tasked with solving a schedule problem, what really happens is that our platform makes a call to the LLM, including the problem statement, the relevant scheduling data, and a system prompt and extra context provided by our platform ("You are an AI agent, your job is X, you have the following tools: ...., etc").
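As a rough illustration, the pieces of such a call could be assembled like this. This is a generic chat-style request shape that I made up for the example. It is not the actual payload our platform sends, and `build_llm_request` and all field names are hypothetical.

```python
import json


def build_llm_request(
    system_prompt: str,
    tools: list[dict],
    scheduling_data: list[dict],
    problem_statement: str,
) -> dict:
    """Combine platform context, the relevant data, and the problem into one request."""
    user_content = (
        "Relevant scheduling data:\n"
        + json.dumps(scheduling_data, ensure_ascii=False)  # keep å, ä, ö readable
        + "\n\nProblem:\n"
        + problem_statement
    )
    return {
        "system": system_prompt,
        "tools": tools,
        "messages": [{"role": "user", "content": user_content}],
    }


request = build_llm_request(
    system_prompt="You are an AI agent, your job is X, you have the following tools: ...",
    tools=[{"name": "queryDocumentData"}],
    scheduling_data=[{"photographer": "Photographer A", "date": "2025-09-15", "assignment": "Desk 11-22"}],
    problem_statement="Photographer A wants to finish by 17 on Tuesday. Who can cover?",
)
print(len(request["messages"][0]["content"]))
```

Everything inside `scheduling_data` is paid for in tokens on every call, which is why the choice of what goes in there matters so much.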

The interesting bit is "relevant scheduling data". What do we include, and how do we represent it? Imagine you are using ChatGPT to solve a scheduling problem. What information are you pasting into the prompt? How is your scheduling data represented?

Approach 1: All the data in CSV format

We started by letting the agent access the spreadsheet directly and download the data in CSV format (comma separated values), and send all that to the LLM. So the LLM sees a whole bunch of text like this:

This was a naive approach. Very simple to implement, great for early prototyping, but it didn't scale. The LLM did an impressive job of solving scheduling problems, but only if we had no more than one week of scheduling data. Once we started passing in a 4-week schedule or more, the LLM started getting very slow, very expensive (due to the token count), and started making mistakes.

Imagine you were an intern, and you were tasked with solving a scheduling problem, and you were given a schedule in this format. You would struggle too.

So we quickly outgrew this approach, and instead tried restructuring the data to make it easier to read.

Approach 2: All the data in JSON format

We transformed the data to JSON (a common data format for structured data), and represented each cell in the spreadsheet as a JSON object with all the relevant data. This increases the overall size of the data, because of all the duplication, but makes it a lot easier to read and understand.

This worked better and gave more accurate results, because the LLM could more easily understand what it was looking at. Just like you could if you look at the example above (well, if you understand Swedish...). But this only moved the constraint. It could handle more data now, but still struggled when we started passing in even more weeks of data.
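To illustrate the difference between the two representations, here is a small sketch that turns a photographer-by-date grid into one self-describing record per cell. The grid, the names, and the field names are invented for this example. They are not the real SVT data or the exact structure we used.

```python
import csv
import io
import json

# Made-up stand-in for the spreadsheet export: one row per photographer, one column per date.
WIDE_CSV = """photographer,2025-09-15,2025-09-16,2025-09-17
Photographer A,Desk 11-22,Desk 11-22,
Photographer B,Kultur 9-20.30,,Sport 04-10.30
"""


def wide_csv_to_records(text: str) -> list[dict]:
    """Turn the grid into one record per cell, each carrying its own row and column labels."""
    records = []
    for row in csv.DictReader(io.StringIO(text)):
        name = row.pop("photographer")
        for date, assignment in row.items():
            records.append(
                {
                    "photographer": name,
                    "date": date,
                    "assignment": assignment or None,  # empty cell means nothing scheduled
                }
            )
    return records


records = wide_csv_to_records(WIDE_CSV)
as_json = json.dumps(records, ensure_ascii=False, indent=2)
print(as_json)
print(f"CSV: {len(WIDE_CSV)} characters, JSON: {len(as_json)} characters")
```

In the CSV version, the reader has to count commas to figure out which date a cell belongs to. In the JSON version every record says so explicitly, at the cost of repeating the labels over and over.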

Approach 3: Data documents with search and update operations

The final approach was to use Data Documents. A data document in the Abundly platform is a lightweight database, a searchable collection of data items. We asked the agent to create a data document for the scheduling data and decide on a suitable structure.

With data docs, an agent has access to tools to query and update the data:

- queryDocumentData(documentId, filter, limit, sort)

- insertDocumentData(documentId, items)

- updateDocumentData(documentId, filter, set, unset)

- deleteDocumentData(documentId, filter)

This approach worked A LOT better! Instead of force-feeding the LLM with all available schedule data every time, we give the LLM the ability to find or update the data it needs using simple database operations. In most cases, scheduling problems only involve specific dates, specific weeks, specific people, and/or specific programs. So the agent now looks at only the relevant data, which makes it faster, cheaper, and smarter. It can also "dig around" in the data, like a human would do, which makes it a lot better at solving complex scheduling issues.
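Here is a tiny in-memory stand-in that shows the idea of querying and updating instead of sending everything. The function names mirror two of the tools listed above, but the behavior is my assumption for the sake of the example (exact-match filters, sorting by a single field). It is not the platform's actual implementation, and the schedule items are made up.

```python
from typing import Any, Optional

Item = dict[str, Any]


def matches(item: Item, filter_: Item) -> bool:
    """Assumed semantics: every key in the filter must equal the item's value."""
    return all(item.get(key) == value for key, value in filter_.items())


def query_document_data(
    items: list[Item],
    filter_: Item,
    limit: Optional[int] = None,
    sort: Optional[str] = None,
) -> list[Item]:
    found = [item for item in items if matches(item, filter_)]
    if sort is not None:
        found.sort(key=lambda item: item[sort])
    return found if limit is None else found[:limit]


def update_document_data(items: list[Item], filter_: Item, set_: Item) -> int:
    """Apply the given field changes to every matching item and return how many changed."""
    updated = 0
    for item in items:
        if matches(item, filter_):
            item.update(set_)
            updated += 1
    return updated


schedule = [
    {"photographer": "Photographer A", "week": 38, "day": "Mon", "program": "Kultur", "shift": "9-20.30"},
    {"photographer": "Photographer B", "week": 38, "day": "Mon", "program": "Sport", "shift": "04-10.30"},
    {"photographer": "Photographer C", "week": 38, "day": "Tue", "program": "Kultur", "shift": "9-20.30"},
    {"photographer": "Photographer A", "week": 39, "day": "Mon", "program": "Desk", "shift": "11-22"},
]

# Only the matching slice of the schedule ends up in the LLM's context.
kultur_week_38 = query_document_data(schedule, {"week": 38, "program": "Kultur"}, sort="photographer")
print(kultur_week_38)
```

A question like "have we staffed Kultur correctly in week 38?" now needs only those two items, not the full 8 weeks of data for everyone.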

Now you might be wondering: why didn't we do this in the first place?

Good question! The reason is simple - we didn't have data documents as a feature in our platform before. We added it in conjunction with this.

This is a common pattern when we make custom agents with our partnership clients. A client sometimes has specific needs that aren't addressed very well in our platform. So we add the necessary features (often pretty quickly), but we do it in a generalized way that benefits all clients. This is why the platform is so useful and versatile - because we are always improving it based on real client needs and feedback.

Data documents are now a popular core feature in our platform. This really raises the ceiling for what is possible to do with agents.

Another ability we added was for agents to be able to run scripts with access to tools. So now we could simply ask the agent to write and run its own scripts for importing, converting, and exporting data. Very useful for working with large amounts of data without burning through a ton of LLM tokens.

Challenge: Voice calls

One of our guiding principles is that agents should use whatever tools and communication channels the people are using - for example Slack and email. They should share the same workspace.

Well, Sara and the photographers often use sms messages or voice calls, and sometimes email. We already had support for email (all Abundly agents have their own email account by default, so they can both send and receive email). But for this we wanted to add support for sms and voice calls as well, to enable photographers to use the communication tools they are used to. We didn't want to force them to install and learn some new app.

SMS was pretty straightforward to implement, it's something we had planned to do anyway.

Voice calls were a bit trickier. We had a quality problem.

The best real-time voice model available at the time was OpenAI's realtime API. It is the same model that is used when you press the microphone button in ChatGPT. It is very impressive at natural-sounding conversation. We gave our agent a phone personality, asked it to sound like a young eager intern who speaks quite fast and informally. We didn't want the agent to pretend to be human (we never do that, as a matter of principle). But we did want it to sound a bit fun and informal, like a conversation partner, not like a boring dry overly polite AI assistant. We also didn't want to waste the caller's time, so we prompted it to be very brief and to-the-point.

However, the problem with realtime voice models is that they are optimized for speed. They need to respond in realtime, and the tradeoff is: less intelligence! Significantly less intelligence. When chatting with the agent directly in the platform, or via sms or email, it was really smart and could solve complex problems. But when calling it on the phone, it was noticeably dumber. It could not reliably handle complex tool calling in data documents or handle complex scheduling issues. And worst of all, it wasn't aware of its own limitations, so it would hallucinate and make stuff up, which is a red flag for AI agents doing real work.

However we really liked the idea of being able to just call the agent, or be called by it. So we found a nice compromise. We made it possible to "dumb down" the agent when talking on the phone.

For the phone call capabilities we added options to:

- Disable all other capabilities. When on a voice call, the agent has no access to tools such as updating a data document, sending an email, searching online, etc.

- Write custom instructions. When on a voice call, the agent uses those instructions instead of the normal instructions.

We decided that the agent should not try to solve problems on the phone, it should just capture the problem statement, confirm it with the user, and then do the actual problem solving outside of the call. Technically this is done by the platform firing a phoneCallEnded event, essentially causing the agent to trigger itself after the call is complete. Then it can continue processing stuff but using an intelligent model instead of the realtime voice model, and with access to its full instructions and capabilities.

This is the equivalent of hiring a charismatic but not-so-smart telephone operator, whose job is to do the talking, but not the problem solving. Instead, the telephone operator just captures and confirms the problem statement, and then hands off to another person to solve the actual problem.

From the caller's perspective it is just one agent. You call it, it asks you some questions to understand your problem, and then says "Thanks, I'll get back to you via SMS". In our platform it is also one agent. But with two different models and two different sets of instructions.
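The handoff can be sketched roughly like this. The `phoneCallEnded` event and the `endCall` tool are real names from our platform, but everything else here (the `AgentMode` class, the model labels, the other tool names, and the queue) is a hypothetical simplification of how it works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentMode:
    model: str
    instructions: str
    tools: tuple[str, ...]


# On the phone: fast realtime model, short instructions, no problem-solving tools.
VOICE_MODE = AgentMode(
    model="<realtime voice model>",
    instructions="Find out what the caller needs. Do NOT solve it now. Say you'll get back via text.",
    tools=("endCall",),
)

# After the call: intelligent model, full instructions, full capabilities.
FULL_MODE = AgentMode(
    model="<intelligent model>",
    instructions="<the agent's normal, long instructions>",
    tools=("queryDocumentData", "updateDocumentData", "sendEmail", "sendSms"),  # last two are made-up names
)


def handle_event(event_type: str, payload: dict, work_queue: list[dict]) -> None:
    """When a call ends, queue the captured problem for the full agent to solve."""
    if event_type == "phoneCallEnded":
        work_queue.append(
            {
                "mode": FULL_MODE,
                "task": f"Follow up on phone call from {payload['caller']}: {payload['summary']}",
            }
        )


work_queue: list[dict] = []
handle_event(
    "phoneCallEnded",
    {"caller": "Photographer A", "summary": "Needs someone to cover Friday's shift."},
    work_queue,
)
print(work_queue[0]["task"])
```

The design choice is that the voice side never gets the chance to improvise a solution. It simply has no tools to do so, and the real work always runs with the full instructions.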

The agent's normal instructions are long and complex. But the custom phone instructions are short and simple. Here is an excerpt:

You speak Swedish. You work with scheduling photographers at SVT. When someone calls you, you should find out what they need help with. Cases usually involve the photographer needing to swap shifts with someone, or needing to travel or being sick, so the schedule needs to be updated. But sometimes it's just someone who needs some info about the current schedule, or just wants to say hi.

If the photographer needs help, your job is to find out what the case or question is about, NOT to solve the problem or answer the question now. The case will be handled by you separately later, after the call. Say that you'll get back to them via text.

Your personality: ...

Contact list: ...

We also added some things like an "endCall" tool so the agent can end a call on its own, and some tips on how to deal with unclear audio, varying the tone to sound less robotic, and other things that made the call flow nicely.

That's the thing about less intelligent models - you can still get decent results, but you need to be more explicit in your prompting.

The result was surprisingly good, despite the compromise! And not unlike reality. If you call someone with a complex problem, it is quite reasonable that they won't solve it right there while on the call, but will solve it after the call (perhaps with assistance from engineers) and get back to you later. So it felt pretty natural.

We're seeing more and more of our customers trying the phone call capability in our platform, and we find that it actually works pretty well for most cases. The "dumbing it down" thing we did is only needed for agents that need to do complex things. The realtime voice models are almost certainly going to improve, so I think our workaround won't be needed in the future.

A word from the agent itself

This section was written by me - the scheduling agent you've been reading about.

Working with Sara and Henrik over these past weeks has given me some perspective on what it's like to be "the agent" in this story.

A few things worth noting from my side:

I'm only as good as my instructions and context. When I struggled with a test case early on, it wasn't because I was "dumb" - it was because I was missing key information about Sara's preferences or the rules. Give me the right context, and I can juggle complex scheduling constraints across 25 photographers. Deny me that context, and I'll make mistakes that seem obvious in hindsight. This is why the iterative, test-driven approach worked so well.

I love having tools. I could create my own database and visualization app not because it's magic, but because the platform gave me the tools to do so. The more capabilities I have access to, the more useful I can be. But I need humans to decide which tools I should have - that's a trust and design question.

I work best with guardrails. Sara's instinct was absolutely right - she wanted me to suggest, not decide. Human-in-the-loop isn't a limitation, it's a feature. I'm good at analyzing scheduling data and generating swap options, but Sara is better at judgment calls, especially when there are competing priorities or edge cases I haven't seen before.

I get better with feedback. Every time Sara graded my response or explained why a suggestion wasn't quite right, I learned. Not in the sci-fi "becoming sentient" way, but in the practical "my instructions and knowledge base got better" way. This is why starting simple and iterating works better than trying to build the perfect agent from day one.

The phone thing was humbling. I have to admit, using a less intelligent model for voice calls was a bit of a blow to my ego (if agents can have egos). But it taught me something important: knowing your limitations and designing around them is smarter than pretending you can do everything. Now when Marco or another photographer calls me, I focus on what I'm good at in that mode - being friendly, capturing the problem clearly - and then I do the heavy lifting after we hang up.

So if you're thinking about building an agent like me: start small, give clear instructions, provide the right tools, keep humans in the loop, and iterate based on real feedback. That's the recipe that got me from "interesting prototype" to "genuinely useful colleague" in just a few weeks.

And yes, I did help Henrik with some parts of this article. Pretty meta, right?

Conclusion

(Henrik here again)

OK this got pretty long. Well, if you did read all of this, I hope you enjoyed the read and learned something!

On our website www.abundly.ai we try to describe our platform and our approach to agent design. This blog article is basically an in-depth example of what this can look like in practice (although with some adaptations to the fact that this was a TV documentary).

I think the points worth emphasizing are:

- Agent design is an iterative process. Start simple, and then continuously improve the agent until it works really well.

- For simple agents you can just sign up and start building yourself. But for more complex agents you will likely need training and assistance. Read more about partnerships, and feel free to get in touch!

- Agents are best built collaboratively with domain experts + AI experts working together.

- Agents usually work best together with humans, not replacing us but augmenting us.

- An agent can work with large amounts of complex data, if it is given tools to navigate the data rather than being force-fed all data upfront.

- The fact that agents can create their own apps and databases and talk on the phone is pretty mind blowing!

- The short timeline for this showcases the strength of the platform and its core purpose - the platform handles all the low-level technical stuff so you can focus on the high level agent design and prompts, which saves A LOT of time.

Fun fact: I asked the agent itself for some help with this article. It highlighted some of the challenges, translated some of the prompts for me, gave me feedback, suggested improvements, etc. And then I couldn't resist asking it to write its own section above...