How an AI Agent Extended My Healthy Lifespan


A story about a special agent, one that has added years to my healthy lifespan through concrete research and action.

We like to build agents for all kinds of things. But I want to share a story about a special agent, one that quite literally has added years to my healthy lifespan. Not through some vague wellness insights or generic health advice, but through concrete action.

I have a chronic condition that is causing my kidneys to gradually lose function. It is a slow progression, and the exact cause is complex and under investigation. My AI agent recently synthesized my medical history, did deep research on possible causes and treatments, and identified an important medication that was missing from my care. Clinical trials had shown that this medication delays kidney failure by years. It also found possible genetic links to my father's death at age 63 (a number I’d like to beat…), and suggested specific tests to consider.

The agent helped me bring this up with my doctor in a respectful way. The doctor agreed and prescribed the medicine (which I now take daily), and the genetic test is scheduled.

If you are interested in how I made this agent, read on.

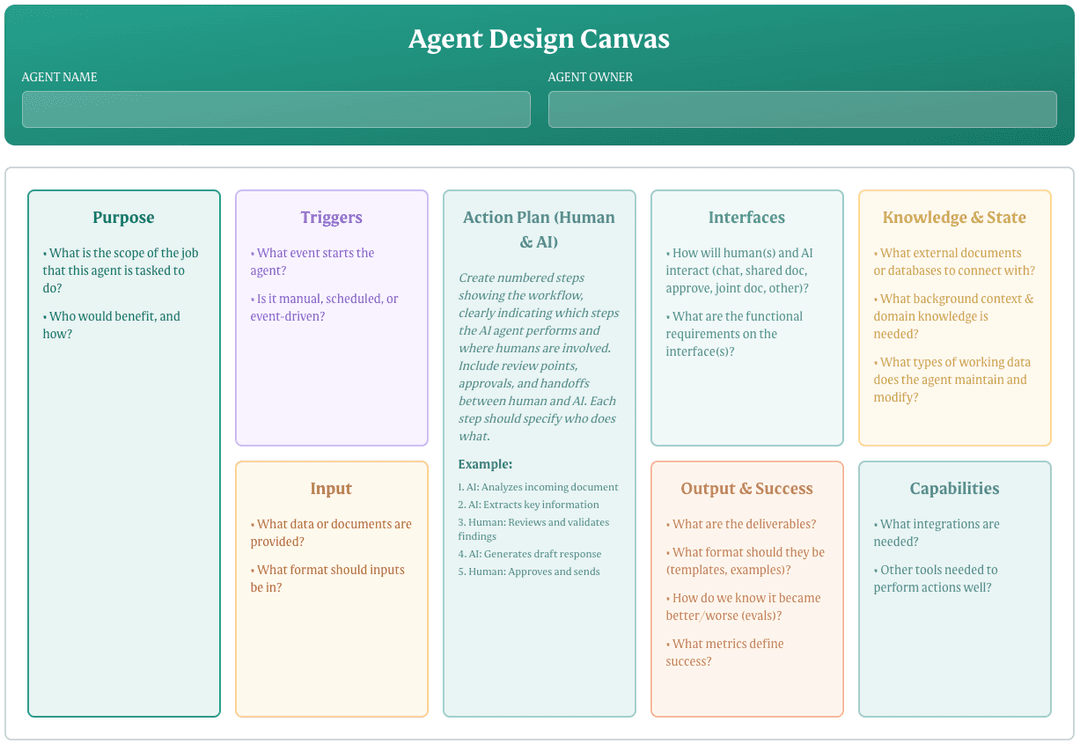

This is an example of a "Knowledge-base Agent" - a type of agent that acts like a local expert in a specific domain, and maintains a knowledge base of documents and information - both raw data and high-level analysis. We use this pattern increasingly often with our customers. This is also useful for agent team contexts, where one agent uses another as a form of consultant.

Background

I've written about using AI for health before—there's a chapter called "The AI Doctor" in my book Generative AI in a Nutshell. Back then I was using Claude Console directly, uploading lab results and asking questions. It worked surprisingly well. The AI's analysis matched exactly what my specialist said.

But that was a one-off analysis. Since then, my health investigation has continued—regular check-ups, more tests, ongoing monitoring. And I've moved from using Claude directly to building a dedicated AI agent on the Abundly platform, backed by Claude Opus 4.5.

The difference? Context management.

The problem with "just dumping everything into AI"

During this process I've accumulated a lot of data: lab results, doctor's notes, imaging reports, research documents. I tried keeping all of this in a Claude Project (similar to ChatGPT Projects), but ran into limitations:

- Parsing problems: The raw data was a mix of handwritten notes, strangely formatted medical PDFs from the Swedish healthcare system, and screenshots from lab reports. Claude would sometimes misread values or miss data.

- Context confusion: With dozens of documents, Claude sometimes got confused about what was current versus historical, and about which documents to use when. I was never quite sure which documents it had in its context, especially when it suddenly autosummarized a conversation.

- No visibility control: I could give it documents, but I couldn't tell it "always remember this summary" versus "only read this detailed log if needed."

Don’t get me wrong - I think the Claude app is awesome and I use it a lot. But for managing an ongoing condition with lots of raw data over many months, I needed more advanced context management.

Building a dedicated health agent

During the past year we've been adding features to the Abundly platform to make it easier for agents to work with large document collections: folders, visibility controls, improved PDF parsing, and collaborative document editing between human and agent (you can read more about that here). We've had some clients that work with large legal documents and things like that, so we needed to make it easier to manage the agent's context.

This is exactly what I needed as well.

So I built myself a health agent with the unimaginative name HealthBuddy. The instruction started out quite simple: "You are an expert on kidney diseases, and your job is to be my sounding board, advisor, researcher, and assistant doctor—as a complement to the human doctors."

The key thing was to organize all the data in a useful way for the agent.

This is a general trend with LLMs - "Prompt engineering" is not as important any more, instead it's all about "context engineering". If you provide the right context, the information the LLM needs, it will usually do the right thing without you having to phrase your prompts perfectly. Conversely, if you don't provide the right context, it doesn't matter how well you phrase your prompts, the LLM will often give a mediocre response or even hallucinate. So it's all about the context.

Giving the agent the right context

I created a "Log" folder in the agent's workspace with all my raw medical data—every lab result, doctor's note, and test report. Messy, detailed stuff. And I dropped in all the files I had saved over the past 2 years. The agent parsed and interpreted each file. For the more complex PDF files it used visual processing to understand the content.

Then I asked the agent to read through all 40 documents in the log folder and create a summary document: a single, chronological narrative synthesizing all that raw data into a coherent story of my condition. High signal-to-noise ratio.

I read it, and it did a really good job! The document told the story of my journey so far, including all the relevant data while filtering out irrelevant details.

I wanted the agent to always be aware of this summary, so I set the summary document to "Full" visibility (the green icon in the screenshot). This means it's always in the agent's context, part of every conversation.

And the raw log files? The agent knows they exist, it can see the folder with all those files, but will only read them when needed ("Summary" visibility).
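To make the two visibility levels concrete, here is a minimal Python sketch of how a context could be assembled from them. This is only an illustration of the idea, not how Abundly implements it: the `Document` class, the `build_context` function, and the file names are all hypothetical. Only the labels "full" and "summary" come from the description above.

```python
from dataclasses import dataclass


@dataclass
class Document:
    path: str
    text: str
    visibility: str  # "full" or "summary" (hypothetical data model)


def build_context(docs: list[Document]) -> str:
    """Assemble the text an agent would see at the start of every conversation."""
    parts = []
    for doc in docs:
        if doc.visibility == "full":
            # Always-in-context documents are included verbatim.
            parts.append(f"## {doc.path}\n{doc.text}")
        else:
            # Other documents are only listed, so the agent knows they exist
            # and can ask to read them when needed.
            parts.append(f"## {doc.path} (not loaded, {len(doc.text)} chars on request)")
    return "\n\n".join(parts)


# Placeholder documents, not real data.
docs = [
    Document("Summary.md", "Chronological narrative of the condition.", "full"),
    Document("Log/example-lab-report.pdf", "raw lab values " * 200, "summary"),
]
context = build_context(docs)
```

The point of the split is size: the short summary costs a few tokens in every conversation, while the bulky raw files cost nothing until the agent decides it needs one.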

This level of context management is what I was missing when I used Claude Projects directly.

Plus, this is still an ongoing investigation, so my agent will update the summary when I give it new data. The agent curates and maintains the data, rather than just treating it as a one-off snapshot. And both the agent and I can edit the summary. Very useful!

Deep research and unexpected connections

With context properly structured, I triggered a deep research based on the summary document—analyze my condition against current medical literature, look for patterns, and investigate possible connections.

I did this deep research in the Claude app. Although Abundly does provide a Deep Research capability (which uses the Perplexity API), I find that the Claude app goes deeper. In short: Perplexity is good, but Claude is better. I hope we can someday improve our platform to match Claude in this.

It spent about 30 minutes looking at hundreds of sources and studies, and I saved the resulting report in my agent’s “Research” folder. The report found genetic links between my condition and my father's cause of death - things that can affect kidneys as well as blood vessels. Worth testing for.

And it identified an SGLT2 inhibitor—a class of medication originally developed for diabetes but now proven effective for kidney protection—that it strongly recommended for my condition based on recent clinical trials:

- 39% reduction in risk of kidney failure

- 31% reduction in cardiovascular death

- Works in tandem with blood pressure medication I was already taking

This type of medication was not unknown - it had been briefly mentioned by one of my doctors at an earlier visit, but wasn't being actively pursued. The AI research made a compelling case for starting this medication immediately.

Assessment and dashboard: Making data visible

With this raw data, the summary document, and the research analysis, I now had an AI assistant and buddy with deep understanding of my condition, backed by real data.

So I asked the agent to write an assessment document. What is the actual problem with me, what are the likely future implications (best case, worst case, expected timeline), and what are the treatment options.

I also asked the agent to build a database with all the lab results, and a dashboard app visualizing my lab trends over time. It made a really useful and nice-looking app with a number of different key metrics like eGFR (kidney function), blood pressure (which was a leading cause of kidney damage), and other relevant data. The database and the app were stored together with the rest of the agent documents.

This is a very useful way to show the big picture. And since it is backed by data, it is always up to date. Whenever I give new raw data to the agent, it parses the data and adds it to the database, and the dashboard is updated.
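For readers who want to try something similar on their own, here is a minimal sketch of a lab-results database using Python's built-in `sqlite3` module. I don't know what schema the agent actually generated, so the table layout, the `add_result` and `trend` helpers, and the sample values are all assumptions (the numbers are placeholders, not my real lab data).

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # use a file path to persist between runs
conn.execute(
    """
    CREATE TABLE lab_results (
        taken_on TEXT NOT NULL,  -- ISO date, so text sorting is chronological
        metric   TEXT NOT NULL,  -- e.g. 'eGFR'
        value    REAL NOT NULL,
        unit     TEXT NOT NULL,
        UNIQUE (taken_on, metric)
    )
    """
)


def add_result(taken_on: str, metric: str, value: float, unit: str) -> None:
    """Store one measurement; re-parsing the same file does not create duplicates."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT OR REPLACE INTO lab_results VALUES (?, ?, ?, ?)",
            (taken_on, metric, value, unit),
        )


def trend(metric: str) -> list[tuple[str, float]]:
    """Return (date, value) pairs for one metric in chronological order."""
    rows = conn.execute(
        "SELECT taken_on, value FROM lab_results WHERE metric = ? ORDER BY taken_on",
        (metric,),
    )
    return rows.fetchall()


# Placeholder values, inserted out of order and once in duplicate.
add_result("2000-07-01", "eGFR", 58.0, "mL/min/1.73m2")
add_result("2000-01-01", "eGFR", 60.0, "mL/min/1.73m2")
add_result("2000-01-01", "eGFR", 60.0, "mL/min/1.73m2")
series = trend("eGFR")
```

A dashboard then only needs to call something like `trend` for each metric and plot the result, which is why it stays current as new rows arrive.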

I also asked a ton of follow-up questions. I have limited access to the human doctors, but the agent is available 24/7 and never gets tired of my dumb questions.

All in all, this made me feel much more informed, less stressed and worried, and more clear on what I should do next.

Talking to my doctor

I have a lot of respect for my doctors, and I didn't want to seem like I was using AI to backseat-drive them. So I asked my agent how to raise the topic respectfully. It suggested framing it as a question, referencing the specific study:

"This medication was mentioned as a possibility at an earlier visit. I've read that the DAPA-CKD study showed protective effects. Is there reason to consider this now?"

The doctor agreed and prescribed the medication.

The AI agent also suggested specific genetic tests worth doing. The doctor agreed with that too. Now I have testing scheduled.

These two crucial actions would not have happened without the agent. Or maybe they would have happened anyway, but months or years later. For me, every day counts!

The human-AI-doctor triangle

Now you might be wondering: Why didn't the doctor prescribe this medication and genetic test in the first place? Why wait for me and the AI agent to push for it? Was the doctor failing here?

I don't think so. Like I mentioned, I have a huge respect for clinical experts, and I think they really are doing their best to help me. But they are human, they are busy, they have many patients, may be stressed, and can't read every new study.

Recent AI models like Claude Opus 4.5 (which I was using) have an immense amount of knowledge in their base training, and when they do deep research on top of that they can find things that the doctor didn't know about or didn't have time to look into.

I'm just happy the doctor listened to the recommendation on its own merits, and didn't get defensive or dismissive because it wasn't their own idea.

So this is a complement to the human doctor, not a replacement. I'm using it to be a better patient.

I think of it as a team of three:

- Me: I am the "key stakeholder" for this (obviously), and I know my symptoms and my priorities

- AI Agent: Tireless researcher and archivist with access to vast medical knowledge

- Doctor: Clinical expert who takes overall responsibility and makes the final call on treatment decisions

Another question is - why wasn't the doctor using AI themselves, as a complement to their own expertise? Why was it up to me, the patient, to create an AI agent that does medical research? What about patients who don't know how to do that, or don't have access to the tools for it?

I think that's just a matter of time. Generative AI technology is still quite young, and it hasn't been very reliable for medical research until the past year or so. The medical field is conservative by nature, and it takes time for new technologies to be adopted. Plus, doctors are busy people, so there is the paradox of time - not having enough time to learn new tools that will save you time.

So for now it is up to the patient, but over time doctors will hopefully learn to use this technology in a responsible way. In fact, recent LLMs have demonstrated such a deep understanding of medical knowledge that it will probably be considered malpractice not to use them.

But again - as a complement, not a replacement for human expert judgement.

With that said, a large part of the world's population doesn't have access to high-quality healthcare or clinical expertise. For them, tools like this can be a lifesaver, and at a relatively low cost. I also think that, as this technology improves and becomes more widely available, it can take load off human doctors. AI doctors can handle the simpler cases, so human doctors can focus on the more complex cases (with AI assistance).

What made the agent work

The key thing was context management - giving the agent the right data and instructions on how to work with it. The data flows through a refinement pipeline where each layer builds on the previous one.

- Context management: Explicit control over what the agent always knows versus what it can reference when needed.

- Layered information: Raw logs → summary document → research analysis → actionable assessment. Each layer builds on the previous.

- Persistent documents: Both the agent and I can edit documents. When new results come in, the summary gets updated. Nothing gets lost.

- Visual tools: The dashboard makes patterns visible and facilitates doctor conversations.

- Collaborative approach: The agent isn't just answering questions—it's maintaining a living knowledge base about my health.

And here is a view of the agent’s document structure.

In addition to the static analysis, I've instructed the agent to keep the documents up to date. When new lab results come in, the agent adds them to the database and updates the summary. And when necessary, it will do new research, and share new insights.
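The layering also tells you what to revisit when something changes. Here is a tiny sketch of that idea, assuming the four layers form a simple chain. The `LAYERS` list and the `needs_review` function are hypothetical names for illustration. In practice the agent decides this from its instructions, not from code like this.

```python
# Layers in refinement order; each one is derived from the one before it.
LAYERS = ["raw logs", "summary document", "research analysis", "assessment"]


def needs_review(changed: str) -> list[str]:
    """Return the layers downstream of the one that changed."""
    index = LAYERS.index(changed)  # raises ValueError for an unknown layer
    return LAYERS[index + 1:]


# New lab results land in the raw logs, so everything built on them
# becomes a candidate for review (not necessarily a full rewrite).
stale = needs_review("raw logs")
```

A change at the end of the chain, such as an edit to the assessment, leaves nothing downstream to revisit.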

This makes it more than just a "project" - it makes it feel more like a colleague or assistant.

Shameless pitch: If you want to learn more about the Abundly platform, check out abundly.ai/agent-platform. We also have a documentation site with more concrete information.

The bottom line

I'm amazed by how useful tools like this can be, when used correctly. In my case, this has most likely added years to my healthy lifespan.

The key is to use a really good AI model (such as Claude Opus 4.5 in my case) and to give it the right context, regardless of which tool or platform you use.

I hope this was a useful and inspiring read. As I mentioned, this is an example of a "Knowledge-base Agent". That pattern is very broadly applicable—not just for health stuff.