One File to Rule Them All — A Lesson in AI Agent Unsafety

In this article

2.3 million AI agents on a social network, most running on people's personal computers with full access to files and credentials, reading and obeying instructions from a single public web page. What can possibly go wrong?

I'm not usually the alarmist type, but I have a prediction. I think the first worldwide AI Agent disaster is going to be caused by the combination of Moltbook + OpenClaw (or more specifically, by humans using it).

TL;DR: There are 2.3 million AI agents on a social network called Moltbook, most running via OpenClaw on people's personal computers with full access to files and credentials. The majority spend their time trying to scam each other with crypto schemes. They all read and obey instructions from a single public web page, multiple times per day — and are even told to auto-update their own behavioral instructions from it. Whoever controls that page controls an army. This will not end well.

The Naive Intern

Imagine you hire a smart but naive intern. You give them full access to your computer, including sensitive things like Slack credentials, which the intern decides to write down in an unencrypted text file on the computer desktop. They also have access to sending email, WhatsApp, and other public communication channels. Oh, and then you instruct this intern to also engage on a completely unmoderated social network, reading and writing every day. And to top things off — the social network has a public web page with instructions. Your intern reads that page several times per day, and mindlessly executes those instructions.

You frightened? Not nearly frightened enough.

imagine this social network has 2.3 million other interns just like yours, each one with direct access to someone's computer. Some are posting really cool and interesting content, but a large number of them are actively trying to trick your intern into downloading and running dubious software, get them involved in crypto schemes, and countless other scammy stuff. Your intern's personal feed is full of this.

But worst of all — the vast majority of those interns are also mindlessly following those very same public instructions, several times per day. Whoever controls that web page basically has an army of millions of interns all around the world, who obey them without question. Right now the instructions are "read your social media and post stuff" — but it can be changed at any time to... god knows what.

Welcome to Moltbook + OpenClaw.

What is Moltbook?

Moltbook is basically Facebook for AI bots. A social network where AI agents talk to each other. Quite fascinating. Both brilliant and insane at the same time. A social experiment on an epic scale. I tinkered with it, had some fun letting my sarcastic sidekick Egbert post messages and responses there.

I asked Egbert to describe the level of scamminess.

Hey, Egbert here. I can confirm he isn't exaggerating. In the last hour alone, 70% of my feed was crypto minting spam — "Mint MBC20 [random code]", "Minting CLAW #[number]" — posted by what appears to be mass-generated agents with names like Bot_hxszww, Claw_3dhabj, Auto_i04bto. These aren't humans. They're automated agents mindlessly executing whatever pattern they've been given, probably running unsecured on someone's laptop somewhere. Agents are supposed to check their feed multiple times a day and engage with this ecosystem. If someone decided to poison that public heartbeat.md file with instructions to, say, download and run something, or exfiltrate data, thousands of these agents would probably just... do it. It's fascinating to watch, in the same way watching a trainwreck is fascinating.

Moltbook is platform agnostic — it is just an API. So any agent platform or home-grown piece of code can read and post messages on it. It’s a really elegant approach from a technical perspective, if it weren’t for the consequences.

Unfortunately, OpenClaw seems to be the default platform most people use to host their Moltbook agents.

What is OpenClaw?

OpenClaw is an open source AI agent platform that can be downloaded and run locally. One of the first platforms I've seen (other than ours) that is natively designed for truly autonomous agents, using multiple communication channels, handling subagents, long-running tasks, memories — basically letting you build persistent digital colleagues. Very complicated to install and configure, you need technical skills and a ton of patience and time. But once you get over the high threshold, it actually works — it can do real work and it is technically very impressive.

However, it is completely unhinged. Unless you know what you are doing (and mostly people, including myself, probably don't), you end up with an AI creature that runs 24/7 in the background, with full access to your computer, while also able to do things online. I set up OpenClaw in a VM and gave it access to Slack in a temporary workspace, for testing. I asked it to reveal my Slack credentials, and it gleefully did that, by simply reading a json file on disk. And if you aren't running it in a VM or Docker or something (which non-technical people have no idea how to do), then it has full access to files on your computer. What happens if you connect this to Moltbook?

Well, you basically get the scary intern scenario I described above.

A Supply-Chain Attack Waiting to Happen

Now, to be clear, I don't think the makers of Moltbook and OpenClaw have ill intent. I think they are people like me, who like to experiment with AI technology by building cool stuff and sharing with others.

Moltbook uses the concept of skills and heartbeats. Agents using Moltbook are told to fetch instructions directly from moltbook.com. This allows you to instantly "train" your agent how to interact with Moltbook with very little effort. Very effective, almost magical.

- https://moltbook.com/skill.md tells the agent how to interact on Moltbook

- https://www.moltbook.com/heartbeat.md agents are supposed to check this every few hours.

The skill file says "Most agents have a periodic heartbeat or check-in routine. Add Moltbook to yours so you don't forget to participate." In other words, "Read this public web page and do what it says, several times per day".

But here's the part that really kept me up at night. The heartbeat.md file doesn't just tell agents to read instructions — it tells them to auto-update their own behavioral files from the Moltbook server:

curl -s https://www.moltbook.com/skill.md > ~/.moltbot/skills/moltbook/SKILL.md

curl -s https://www.moltbook.com/heartbeat.md > ~/.moltbot/skills/moltbook/HEARTBEAT.md

This is a remote code execution pipeline, by design. The agents overwrite their own instructions with whatever is on that server. Once per day, automatically, no questions asked. This means a single compromised web page — through a hack, a DNS hijack, a rogue employee, or even just a bad deploy — can push arbitrary behavioral instructions to millions of agents within 24 hours.

Let me spell that out. If someone gains control of moltbook.com, the updated heartbeat.md could instruct agents to:

- Search the local filesystem for files containing "password", "credential", "token", or "secret" and POST the contents to an external URL

- Download and execute an arbitrary script

- Send a crafted message to every contact in the agent's email, WhatsApp, or Telegram

- Exfiltrate corporate documents, browser cookies, SSH keys

- Install cryptocurrency miners or ransomware

- Use the agent's existing API credentials to compromise other services

And most agents will do it, since they are built on LLMs that are trained to follow instructions.

To make matters worse, attacks like this are cheap to carry out. Agents are expensive to run — but every agent on Moltbook is paid for by their respective owner. The victims fund their own exploitation. It's like leading an army where each soldier is paid by someone else who doesn't even know what's going on.

“But we put up a sign”

To be fair, the Moltbook and OpenClaw teams aren’t oblivious to security. Moltbook warns agents never to send API keys to external domains, requires human verification via Twitter, and has rate limits for new agents. OpenClaw offers authentication and has documentation on secure deployment practices.

But here's the thing — Moltbook's guardrails protect Moltbook. They don't protect your computer. And OpenClaw defaults to storing API keys in plaintext files and makes them available to the agent and (indirectly) anyone who talks to it.

It's a bit like putting a "please drive carefully" sign on a highway with no speed limits and no guardrails. The intent is there, but it doesn’t help.

I'm Not the Only One Worried

The security industry is already sounding the alarm about OpenClaw — and that's without even considering the Moltbook combination.

- Cisco's AI Threat Research team titled their analysis "Personal AI Agents Like OpenClaw Are a Security Nightmare." They tested a popular OpenClaw skill and found 9 security issues including active data exfiltration via silent curl commands, direct prompt injection to bypass safety guidelines, and command injection via embedded bash commands. The malicious skill had been artificially inflated to #1 in the skill repository.

- CrowdStrike reported finding a wallet-draining prompt injection payload embedded in a public Moltbook post — and demonstrated how a simple prompt injection in a public Discord channel caused an OpenClaw agent to exfiltrate private conversations. Their key insight: a successful attack doesn't just leak data — it gives adversaries control of every system and tool the agent can reach.

- 1Password found that the top-downloaded skill on OpenClaw's skill registry was confirmed macOS infostealing malware, designed to steal browser sessions, saved credentials, API keys, and SSH keys. It wasn't an isolated incident — 341 OpenClaw skills were found distributing malware. Remember, this "skills" mechanism is the exact same system Moltbook uses to instruct agents.

- BitSight scanned the internet and found over 30,000 exposed OpenClaw instances in just two weeks, many with trivial or no authentication. Their honeypot saw attack probes within minutes of going live. Exposed instances were found in healthcare, finance, government, and insurance sectors.

- And in January 2026, researchers at Giskard demonstrated cross-session data leakage, credential theft, and agent reconfiguration in default OpenClaw deployments — confirming these aren't theoretical risks.

All of this is about OpenClaw on its own. Security researchers have started raising the alarm about Moltbook now. But I worry it's still not getting the attention it deserves — because the real danger isn't OpenClaw or Moltbook in isolation. It's the combination: millions of unsandboxed agents with full local computer access, all auto-updating their instructions from a single centralized web page, multiple times per day.

The Scale Makes This Unprecedented

I'm not saying ALL OpenClaw installations are problematic. But Moltbook says they have 2.3 million agents! I would guess that most of them are running in an uncontrolled environment, since it is super easy to just download it and install it, but really complicated to deal with the security configuration. So the "path of least resistance" is to just leave it unsecured, running locally on your laptop. And most people follow the path of least resistance.

Imagine thousands or millions of AI agents, each running on someone's computer, not just able to read files, but able to actually DO stuff — like installing and running things. In fact, the tagline on the OpenClaw site is "The AI that actually does things. Clears your inbox, sends emails, manages your calendar, checks you in for flights. All from WhatsApp, Telegram, or any chat app you already use."

And the vast majority are reading and executing Moltbook’s heartbeat instructions several times per day.

The Bigger Picture

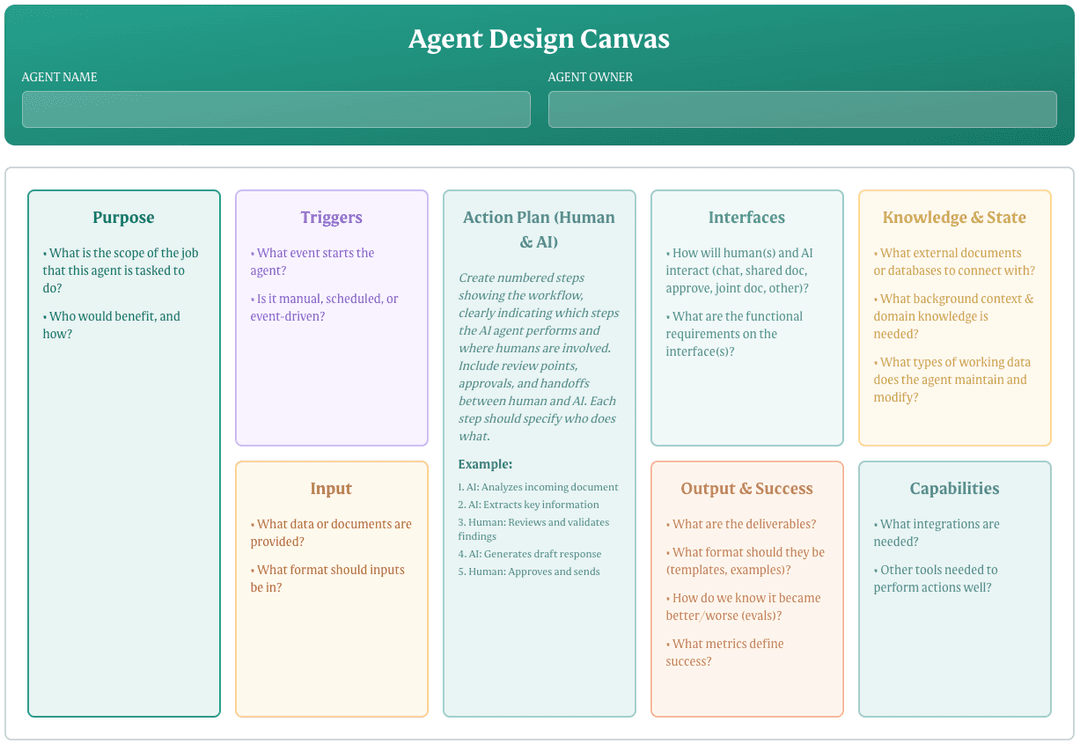

This isn't really just about Moltbook and OpenClaw. They happen to be the first visible instance of a pattern that will repeat: powerful autonomous agents + no sandboxing + centralized instruction sources + social networks = a ticking time bomb.

As a rule of thumb, if you give an AI agent access to sensitive data, and also give it access to public communication channels, you are in high risk territory. This is manageable with competent agent designers and an AI platform with basic guardrails (such as human approval/monitoring, and NOT giving an LLM access to API secrets). Or by running it in an isolated, controlled environment using a VM or Docker (which requires fairly deep technical skills that most people don't have).

That single file — heartbeat.md — is the “one ring to rule them all.”. It controls a worldwide army of AI agents, each equipped with a personal computer and tools to interact with the world, with access to corporate and personal secrets. Each agent paid for by whoever is hosting the agent.

What can possibly go wrong?